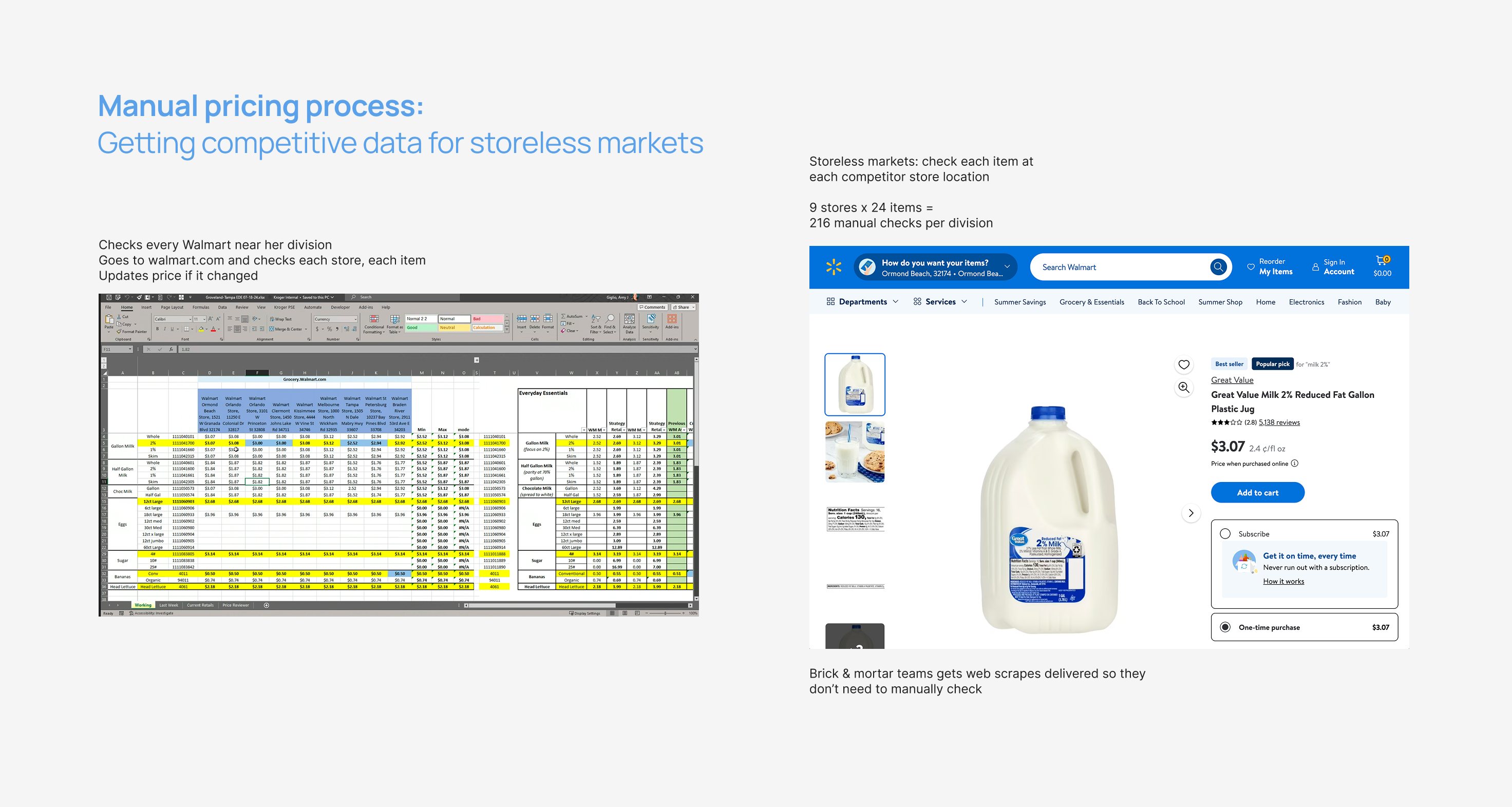

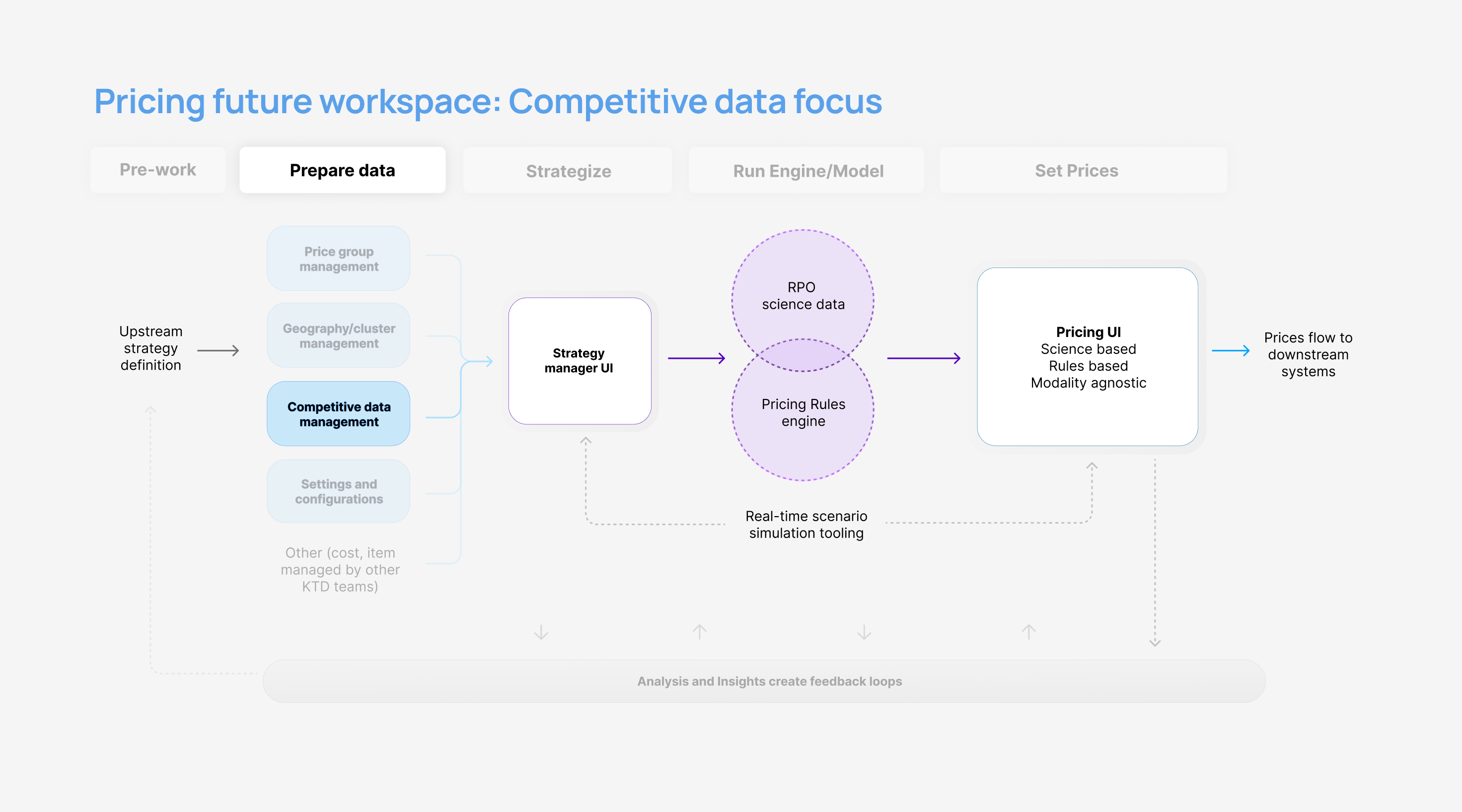

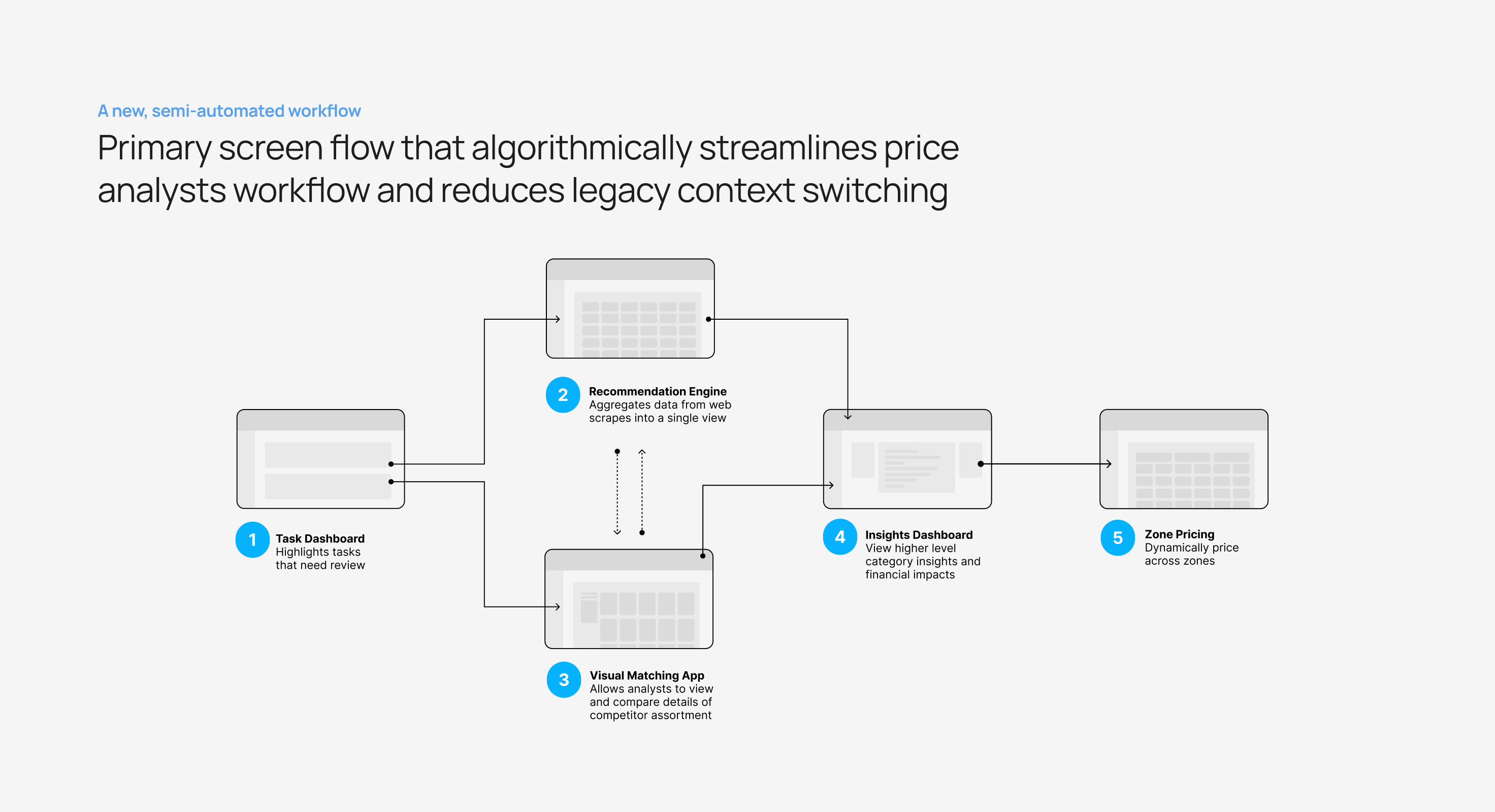

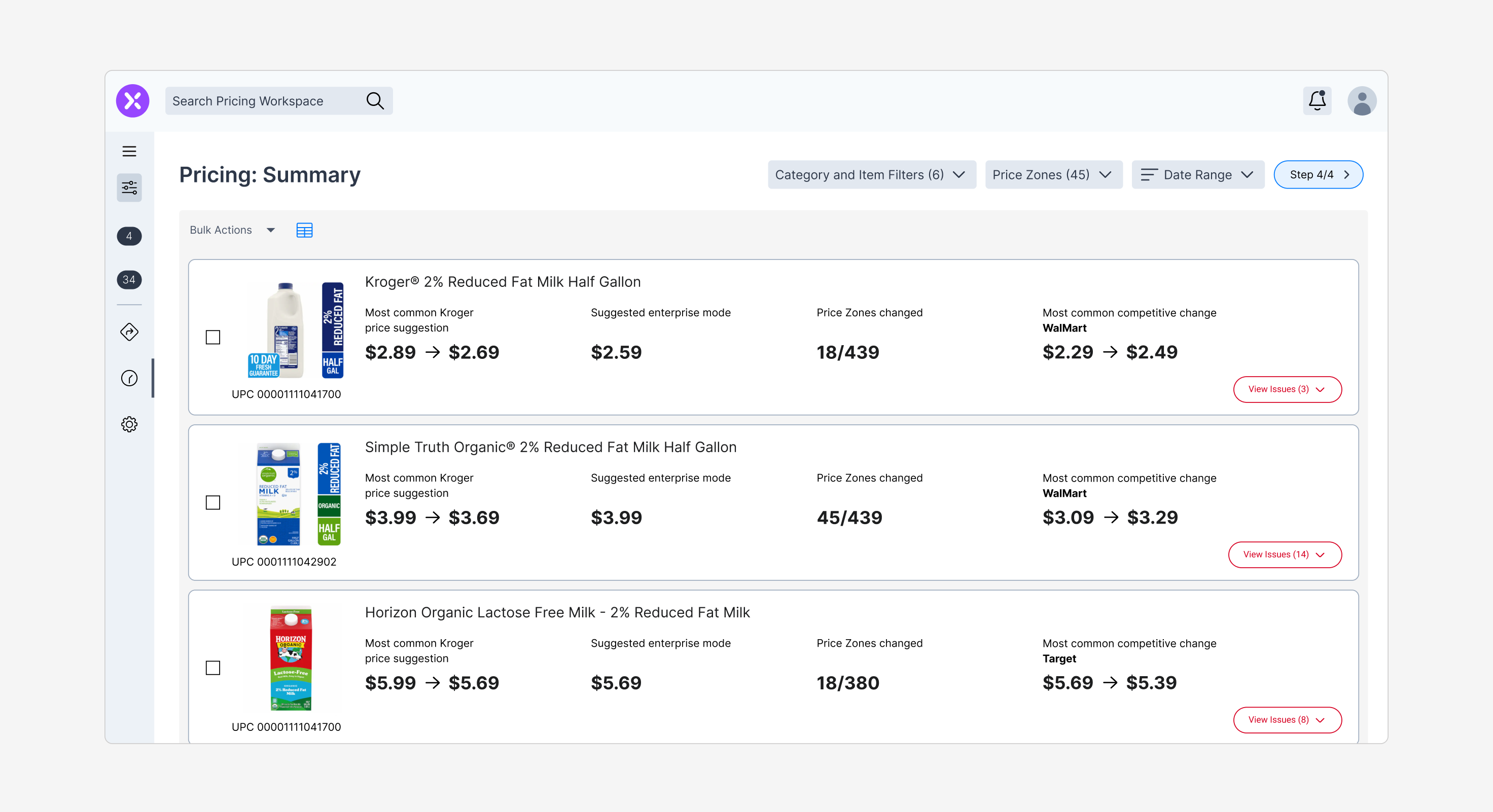

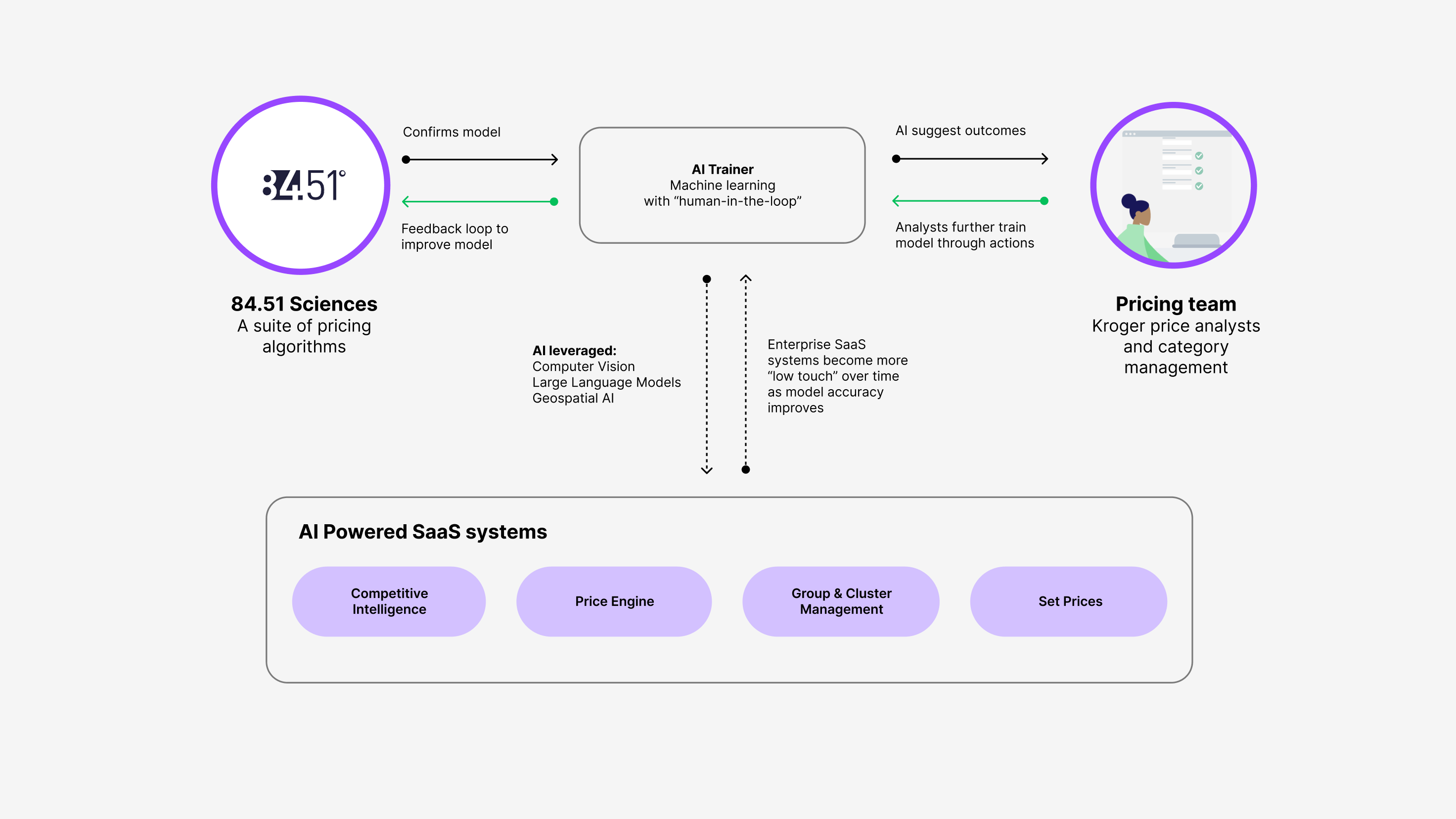

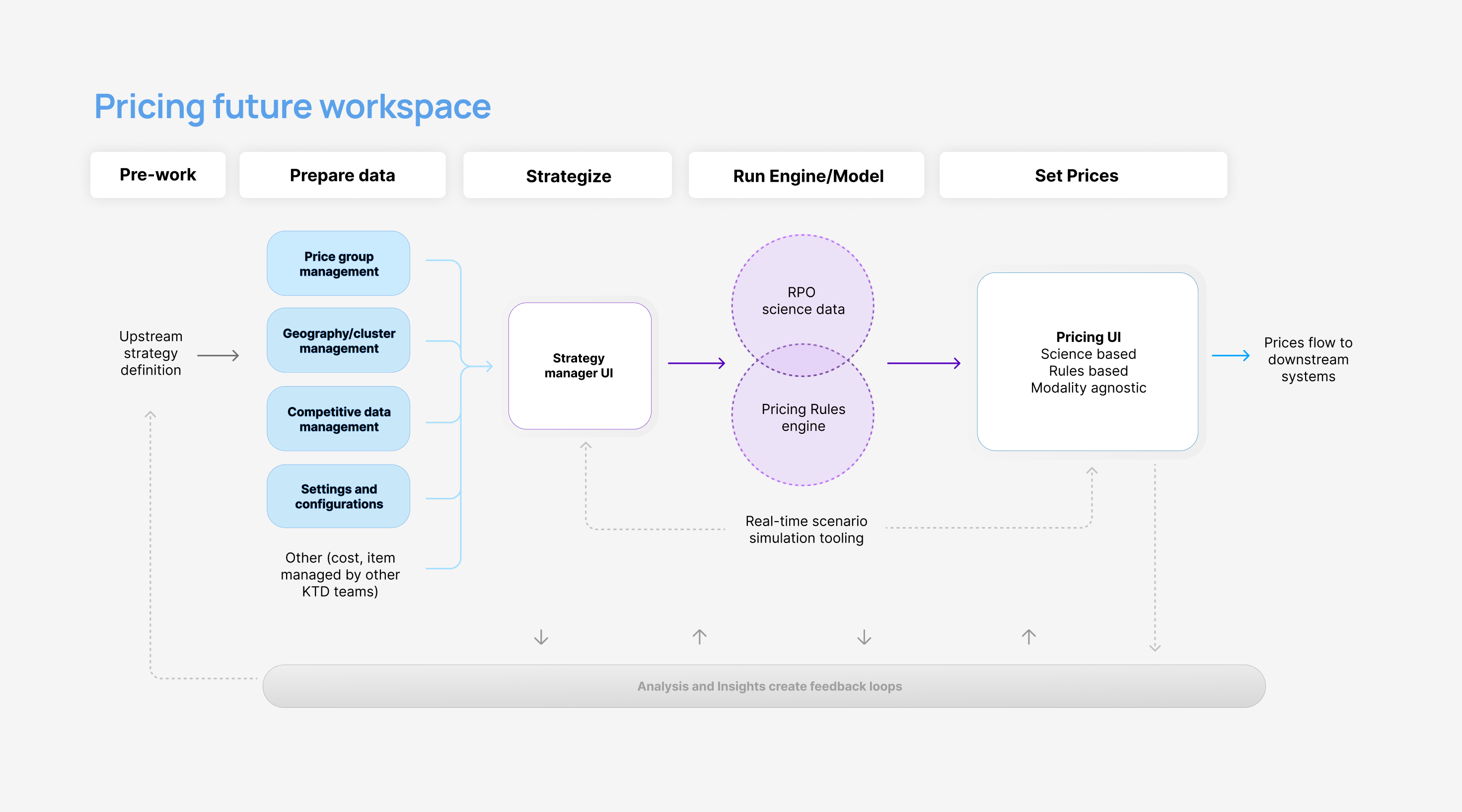

As a Product Design Manager at Kroger, I led a team of designers across five products, focusing on aligning workflows, automating processes, and refining product strategy. This case study covers a redesign of Kroger’s pricing systems, which relied heavily on manual labor and outdated processes. The goal was to modernize those systems through data automation and strategic design.